How a machine learning model that powers a scorecard is built

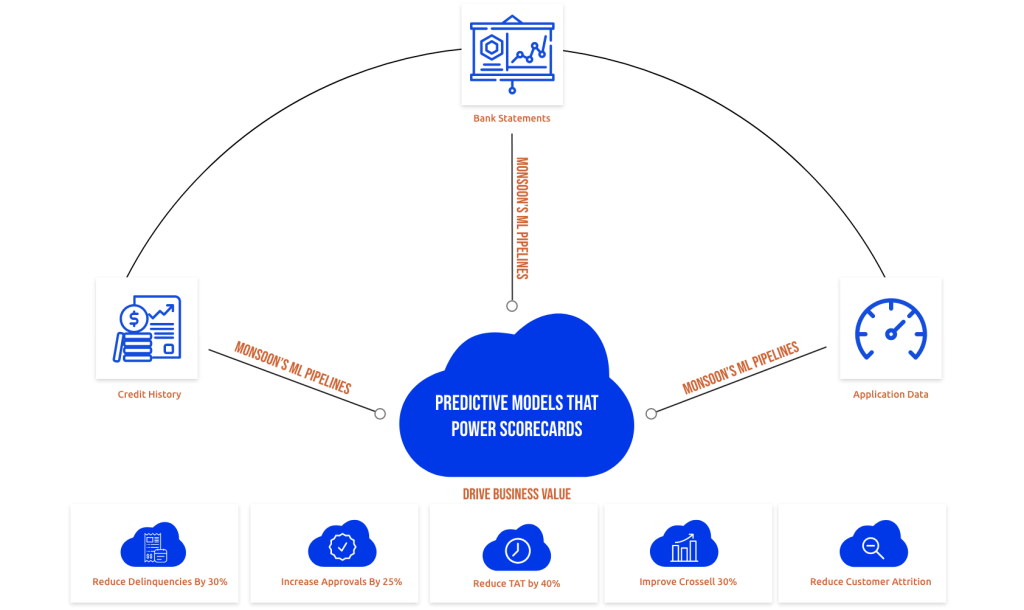

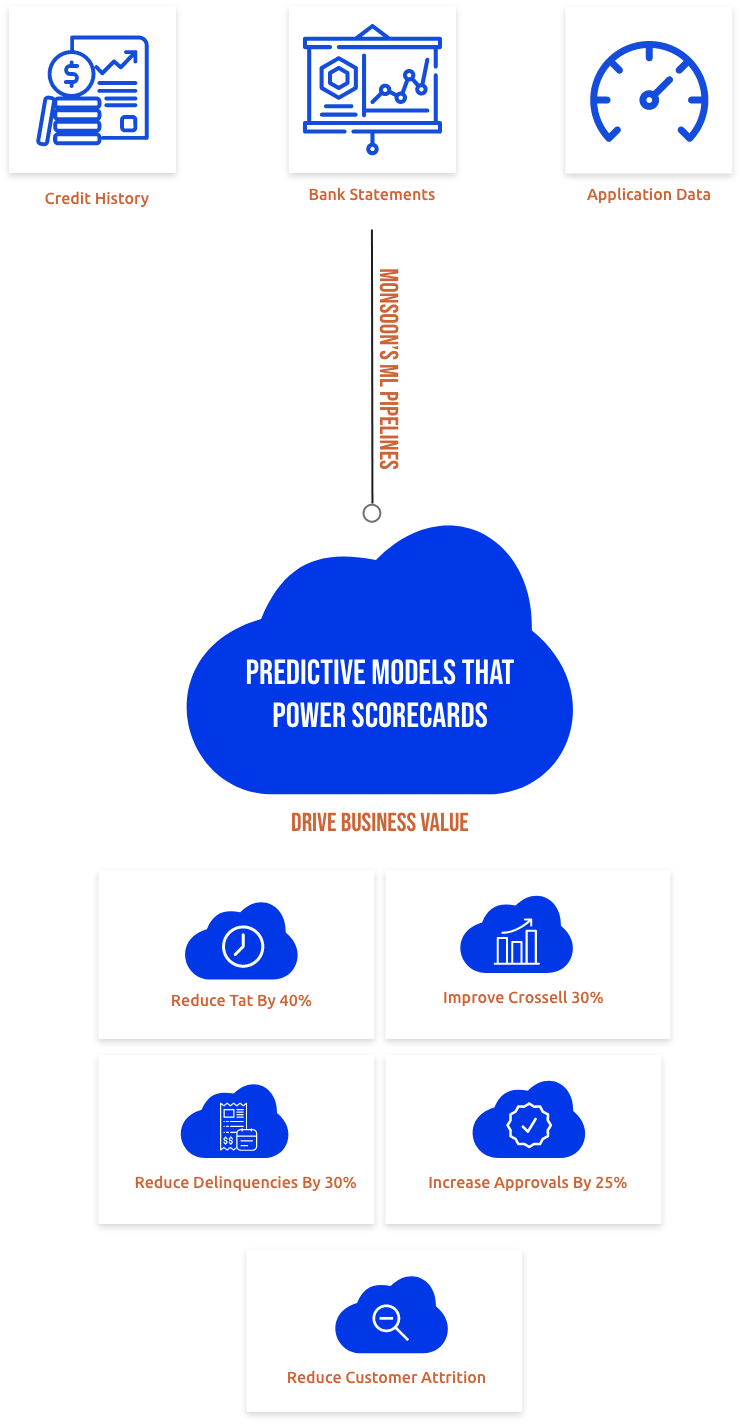

Monsoon’s ML Pipelines

Data Analysis

Monsoon's proprietary pipelines analyze the uploaded data, check it for information leakage, flag strong correlations and anomalous patterns, and prepare it for the next steps.
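Monsoon's own checks are proprietary and are not described here. As a generic illustration of one common heuristic, the sketch below flags columns whose correlation with the outcome is implausibly strong. That pattern often signals information leakage, such as a field that is only populated after the outcome is known. The column names, the data and the 0.9 threshold are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (sd_x * sd_y)


def leakage_suspects(columns, target, threshold=0.9):
    """Names of columns whose absolute correlation with the target exceeds threshold."""
    return sorted(
        name for name, values in columns.items()
        if abs(pearson(values, target)) > threshold
    )


# Hypothetical sample: 1 = bad loan, 0 = good loan
target = [0, 1, 0, 1, 1, 0]
columns = {
    "income": [40, 40, 60, 60, 50, 50],
    # Hypothetical field recorded after the loan went bad, so it "predicts" too well
    "days_past_due_after_loan": [0, 95, 0, 120, 90, 0],
}
print(leakage_suspects(columns, target))  # ['days_past_due_after_loan']
```

A flagged column is only a prompt for review. A genuinely strong, legitimate predictor can also cross the threshold, so the final call needs knowledge of when each field is populated.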

Modeling Strategy

Our pipelines process the data to build a range of modeling strategies from which the user can select, covering good/bad definitions, flag definitions, observation windows and inclusion criteria.
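To make "good/bad definition" concrete, here is a sketch under one common credit-scoring convention. An account is labelled bad if it reaches a days-past-due (DPD) threshold within a fixed window after booking. The `Strategy` class, the thresholds and the repayment history are hypothetical. The example shows how two candidate strategies can label the same account differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    """Hypothetical good/bad definition: dpd_threshold+ DPD within window_months."""
    dpd_threshold: int
    window_months: int


def label_account(monthly_dpd, strategy):
    """Return 1 (bad), 0 (good) or None (indeterminate: window not yet observed).

    monthly_dpd[i] is the days past due reported i + 1 months after booking.
    """
    window = monthly_dpd[: strategy.window_months]
    if any(dpd >= strategy.dpd_threshold for dpd in window):
        return 1
    if len(window) < strategy.window_months:
        return None  # too little history to call the account good
    return 0


account = [0, 0, 30, 60, 0, 0, 0, 0, 0, 0, 0, 0]
strategies = {
    "90+ DPD in 12 months": Strategy(dpd_threshold=90, window_months=12),
    "60+ DPD in 6 months": Strategy(dpd_threshold=60, window_months=6),
}
for name, strategy in strategies.items():
    print(name, label_account(account, strategy))
# 90+ DPD in 12 months 0
# 60+ DPD in 6 months 1
```

Returning `None` for accounts whose window is still open keeps immature accounts out of the "good" class. Excluding such accounts is one form of inclusion criterion.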

Feature Engineering

Monsoon's feature engineering pipeline uses a unique blend of domain knowledge and statistical inference to build thousands of features from each dataset, capturing complex patterns that link the data to the response variables.
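Monsoon's feature recipes are not public. One generic way feature counts reach the thousands is to apply a handful of aggregations over several time windows to every raw series, such as inflows, outflows, balances or enquiries. The sketch below assumes a monthly series with the most recent month last. The window lengths, aggregations and naming scheme are illustrative choices.

```python
from statistics import mean


def window_features(monthly_values, prefix, windows=(1, 3, 6)):
    """Aggregate a monthly series (most recent month last) over several windows."""
    features = {}
    for w in windows:
        recent = monthly_values[-w:]
        features[f"{prefix}_sum_{w}m"] = sum(recent)
        features[f"{prefix}_mean_{w}m"] = mean(recent)
        features[f"{prefix}_max_{w}m"] = max(recent)
    # Trend feature: latest month relative to the average over the longest window
    longest = max(windows)
    baseline = mean(monthly_values[-longest:])
    features[f"{prefix}_last_to_mean_{longest}m"] = (
        monthly_values[-1] / baseline if baseline else 0.0
    )
    return features


# Hypothetical six months of bank-statement inflows
inflows = [100, 120, 80, 90, 110, 100]
feats = window_features(inflows, "inflow")
print(len(feats), feats["inflow_sum_3m"], feats["inflow_max_6m"])  # 10 300 120
```

With, say, 50 raw series, 5 windows and 6 aggregations, the same loop already yields 50 × 5 × 6 = 1,500 aggregate features before any ratios or trends. This volume is why a strict selection step follows.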

Feature Selection

Our state-of-the-art feature selection pipelines, some of which are proprietary, distinguish noise from genuinely powerful features that hold across time and across samples. Only these features are selected for the final model.
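The selection pipelines themselves are proprietary. One widely used, non-proprietary test of whether a feature "holds across time" is the Population Stability Index (PSI). PSI compares a feature's bucketed distribution in the development sample (shares e_i) with an out-of-time sample (shares a_i): PSI = sum over buckets of (a_i - e_i) * ln(a_i / e_i). The sketch below keeps only features whose PSI stays under 0.1. That cutoff is a common rule of thumb, not a Monsoon setting. The bucket edges and data are hypothetical.

```python
import math
from bisect import bisect_right


def bucket_shares(values, edges):
    """Share of values falling in each bucket defined by sorted interior edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # Floor empty buckets at a tiny share so the logarithm stays finite
    return [max(c / len(values), 1e-6) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index of one feature between two samples."""
    e = bucket_shares(expected, edges)
    a = bucket_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def stable_features(dev, oot, edges, max_psi=0.1):
    """Names of features whose PSI between dev and out-of-time samples is below max_psi."""
    return sorted(name for name in dev if psi(dev[name], oot[name], edges[name]) < max_psi)


# Hypothetical development and out-of-time samples for two features
dev = {"utilisation": [1, 2, 3, 4, 5, 6, 7, 8], "enquiries": [1, 2, 3, 4, 5, 6, 7, 8]}
oot = {"utilisation": [8, 7, 6, 5, 4, 3, 2, 1], "enquiries": [7, 8, 8, 8, 7, 8, 7, 8]}
edges = {"utilisation": [4.5], "enquiries": [4.5]}
print(stable_features(dev, oot, edges))  # ['utilisation']
```

Stability is only one filter. A feature that is stable but carries no signal would normally be dropped by a separate predictive-power test.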

Modeling

After hyperparameters are optimized over large search spaces, multiple models are built and ensembled. The ensemble optimizes a specific cost function, picked from a range of cost functions, so that it achieves a "fit" with the business problem.
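The sketch below is a small illustration of "optimizing a specific function picked from a range of cost functions". It blends two models' predicted probabilities and grid-searches the blend weight against whichever objective the user picks. The first option is log loss. The second is a business-weighted cost in which approving a bad loan costs five times as much as rejecting a good one. The cost ratio, the 0.5 cutoff and the data are assumptions. A single-weight grid search is a deliberately tiny stand-in for a full hyperparameter search.

```python
import math


def log_loss(y, p):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    eps = 1e-12
    return -sum(
        t * math.log(max(q, eps)) + (1 - t) * math.log(max(1 - q, eps))
        for t, q in zip(y, p)
    ) / len(y)


def business_cost(y, p, cutoff=0.5, cost_missed_bad=5.0, cost_rejected_good=1.0):
    """Average cost when applicants with p >= cutoff are rejected (label 1 = bad)."""
    total = 0.0
    for t, q in zip(y, p):
        rejected = q >= cutoff
        if t == 1 and not rejected:
            total += cost_missed_bad
        elif t == 0 and rejected:
            total += cost_rejected_good
    return total / len(y)


def best_blend(y, p1, p2, objective, steps=10):
    """Grid-search w in blend = w*p1 + (1-w)*p2; return (w, objective value)."""
    best = None
    for i in range(steps + 1):
        w = i / steps
        blend = [w * a + (1 - w) * b for a, b in zip(p1, p2)]
        score = objective(y, blend)
        if best is None or score < best[1]:
            best = (w, score)
    return best


# Hypothetical outcomes and predictions from two candidate models
y = [1, 0, 1, 0, 1, 0]
p1 = [0.9, 0.2, 0.4, 0.1, 0.7, 0.6]
p2 = [0.6, 0.4, 0.8, 0.3, 0.3, 0.2]
for objective in (log_loss, business_cost):
    print(objective.__name__, best_blend(y, p1, p2, objective))
```

The grid includes w = 0 and w = 1, so on this sample the blend is never worse on the chosen objective than either model alone. In practice the weight would be tuned on a held-out sample rather than on the data it is scored against.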

Validation

The candidate models are then validated across time and across sub-samples to ensure that the final model not only performs well over time but also works well across the different segments of the population it is built for.
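One common way to check that a model works across time and across segments is to compute a rank-ordering metric separately for each time period and each segment. Typical choices are AUC or its credit-scoring counterpart, Gini = 2 × AUC - 1. The sketch below assumes scores are predicted probabilities of being bad. The field names, segments and data are hypothetical. In the sample, the pooled Gini for the first month looks acceptable (0.5), yet the self-employed segment is rank-ordered backwards. A per-segment check is meant to expose exactly this kind of problem.

```python
from collections import defaultdict


def auc(labels, scores):
    """Probability that a random bad (1) outscores a random good (0); ties count half."""
    bads = [s for lab, s in zip(labels, scores) if lab == 1]
    goods = [s for lab, s in zip(labels, scores) if lab == 0]
    if not bads or not goods:
        return None  # undefined when a group contains only one class
    wins = sum(1.0 if b > g else 0.5 if b == g else 0.0 for b in bads for g in goods)
    return wins / (len(bads) * len(goods))


def gini_by(rows, key):
    """Gini (2 * AUC - 1) of the score, computed separately for each value of row[key]."""
    groups = defaultdict(lambda: ([], []))
    for row in rows:
        labels, scores = groups[row[key]]
        labels.append(row["label"])
        scores.append(row["score"])
    result = {}
    for group, (labels, scores) in groups.items():
        a = auc(labels, scores)
        result[group] = None if a is None else 2 * a - 1
    return result


# Hypothetical validation rows: label 1 = bad, score = predicted probability of bad
rows = [
    {"month": "2023-01", "segment": "salaried", "label": 1, "score": 0.8},
    {"month": "2023-01", "segment": "salaried", "label": 0, "score": 0.2},
    {"month": "2023-01", "segment": "self-employed", "label": 1, "score": 0.4},
    {"month": "2023-01", "segment": "self-employed", "label": 0, "score": 0.6},
    {"month": "2023-02", "segment": "salaried", "label": 1, "score": 0.7},
    {"month": "2023-02", "segment": "salaried", "label": 0, "score": 0.3},
]
print(gini_by(rows, "month"))    # {'2023-01': 0.5, '2023-02': 1.0}
print(gini_by(rows, "segment"))  # {'salaried': 1.0, 'self-employed': -1.0}
```

The pairwise AUC here is quadratic in group size. That is fine for a sketch, but a rank-based formula would be used on real validation samples.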

ML Model

The final model picked by our pipelines is then tested thoroughly to ensure that all constraints on latency, size, response and the target deployment environment are adequately met.
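The constraint tests themselves are not described, so what follows is only a sketch of what a latency and size gate can look like. It times repeated calls to a scoring function and takes the 95th-percentile latency. It also measures the serialized model size with `pickle` and compares both figures against budgets. The toy logistic scorecard, its coefficients and the budgets are hypothetical.

```python
import math
import pickle
import statistics
import time

# Hypothetical logistic scorecard (coefficients are illustrative only)
MODEL = {"intercept": -2.0, "weights": {"utilisation": 3.0, "enquiries_6m": 0.4}}


def predict(features, model=MODEL):
    """Probability of default from a linear score passed through the logistic function."""
    z = model["intercept"] + sum(
        w * features.get(name, 0.0) for name, w in model["weights"].items()
    )
    return 1.0 / (1.0 + math.exp(-z))


def check_constraints(model, predict_fn, sample_inputs, max_p95_ms, max_bytes, repeats=200):
    """Measure p95 scoring latency and serialized size against deployment budgets."""
    timings_ms = []
    for i in range(repeats):
        x = sample_inputs[i % len(sample_inputs)]
        start = time.perf_counter()
        predict_fn(x)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
    p95_ms = statistics.quantiles(timings_ms, n=20)[-1]
    size_bytes = len(pickle.dumps(model))
    return {
        "p95_ms": p95_ms,
        "size_bytes": size_bytes,
        "latency_ok": p95_ms <= max_p95_ms,
        "size_ok": size_bytes <= max_bytes,
    }


report = check_constraints(
    MODEL, predict, [{"utilisation": 0.5, "enquiries_6m": 2}],
    max_p95_ms=50.0, max_bytes=10_000,
)
print(report)
```

A real gate would run on the target hardware and in the target environment. Timings from a developer machine say little about, for example, a serverless cold start.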

What kind of data is used to build models?

Financial Data

- Credit History

- Bank Statements

- Application Data

Alternate Data

- SMS Data

- FASTag Data

- UPI Data

- GST Data

Past Repayment Behavior

- Bureau Scrubs

- On-book Repayments

- Custom Flags

Predictions as a Service

Bespoke Commercial Arrangement

Subscription to Thoth to create your own models and scorecards

How a model can be consumed

Diagram: a model hosted on AWS/Azure/GCP or behind the bank’s firewall, accessed through an exposed API.

Our models (whether custom built or not) are always exposed via a RESTful API. A model can be hosted on any of the three major cloud platforms (AWS/Azure/GCP) in a variety of ways, on either the public cloud or a private cloud with full control given to the lender, for example:

- In containers (e.g. Azure Container Instances)

- Serverless (e.g. AWS Lambda)

- On virtual machines
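To show what "exposed via a RESTful API" means for a caller, here is a minimal sketch that uses only Python's standard library. It serves a `POST /score` endpoint that accepts applicant features as JSON and returns a probability. The endpoint path, field names and coefficients are hypothetical and are not Monsoon's actual API. Python's `http.server` is also not recommended for production use. In practice the same handler logic would sit behind one of the hosting options listed above.

```python
import json
import math
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical scorecard coefficients; a real service would load a trained model
INTERCEPT = -2.0
WEIGHTS = {"utilisation": 3.0, "enquiries_6m": 0.4}


def score(features):
    """Probability of default for a dict of numeric features."""
    z = INTERCEPT + sum(w * float(features.get(k, 0.0)) for k, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


class ScoreHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/score":  # hypothetical endpoint name
            self.send_error(404, "Unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            features = json.loads(self.rfile.read(length))
            body, status = {"probability_of_default": score(features)}, 200
        except (ValueError, TypeError, AttributeError, OverflowError):
            # Malformed JSON, non-numeric fields, non-object bodies or extreme inputs
            body, status = {"error": "could not score request"}, 400
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, fmt, *args):
        pass  # silence per-request logging in this example


def make_server(host="127.0.0.1", port=8080):
    """Create (but do not start) the HTTP server; call serve_forever() to run it."""
    return HTTPServer((host, port), ScoreHandler)
```

To try it, run `make_server().serve_forever()`. Then post a request such as `curl -X POST http://127.0.0.1:8080/score -H "Content-Type: application/json" -d '{"utilisation": 0.5, "enquiries_6m": 2}'`. The response is a small JSON object, so the lender's systems stay decoupled from how and where the model is hosted.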